Oracle Loader for Hadoop: OCI Direct

Path

In

the previous tutorial post we discussed the basic mechanics and structure of an

OLH job using JDBC. In this post we move

on to the more mainstream method used for OLH, specifically OCI Direct

Path. The focus here is on loading

Oracle tables with really big data, and we will discuss how to do this

efficiently, and provide some basic rules for optimizing load performance. We will discuss the mechanics of

submitting an OLH job, and then take a dive into why this OLH load method is

what you want to use for most situations.

The Structure of an OLH Command using OCI Direct Path

The

structure of an OLH command using OCI Direct Path is very similar to the

structure we described for submitting a JDBC load:

$HADOOP_HOME/bin/hadoop jar

$OLH_HOME/jlib/oraloader.jar

oracle.hadoop.loader.OraLoader

-D

oracle.hadoop.loader.jobName=OLHP_fivdti_dtext_oci_10_723

-Doracle.hadoop.loader.loaderMapFile=file:/tmp/loaderMap_fivdti.xml

-D mapred.reduce.tasks=10

-D mapred.input.dir=/user/olh_performance/fivdti/56000000_90

-D

mapred.output.dir=/user/oracle/olh_test/results/fivdti/723">

-conf /tmp/oracle_connection.xml

-conf /tmp/dtextInput.xml

-conf /tmp/dlOutput.xml

Aside from cosmetic changes (e.g. the job name) the key

differences between this and the JDBC command discussed in lesson 2, is a

non-zero value for “mapred.reduce.tasks” property and a different conf file for

specifying the type of output (i.e. the

bold italic lines above).

The

new file we are using, “dlOutput.xml”, specifies the output format is OCI

Direct Path (and not JDBC):

<configuration>

<property>

<name>mapreduce.outputformat.class</name>

<value>oracle.hadoop.loaderlib.output.OCIOutputFormat</value>

</property>

</configuration>

So switching from JDBC to OCI Direct Path is trivial. A little less trivial is why OCI Direct Path is

preferred and what rules you should know to make this type of loading perform

well and to maximize efficiency.

Rule 1: When using OCI Direct Path the target table must be

partitioned.

This might sounds like a constraint, but practically

speaking it isn’t.

Exploiting Oracle Table Partitioning

![Partitions]()

A full understanding

of Oracle table partitioning goes beyond the scope of this tutorial, and you

would be advised to read related documentation that gets into this subject in

depth, but for the sake of readers who live mostly in the world of Hadoop and

have a limited understanding of Oracle let’s briefly outline the basics of what

Oracle table partitioning is and why it is essential to understand.

Rule 2: If you are loading really big data into an Oracle table, your

Oracle table will want to be partitioned.

The reason is pretty simple. Table partitions are Oracle’s

method of breaking up a table into workloads that can be optimized transparently by SQL. In the same way MapReduce

jobs scale out by breaking up a workload into data blocks and scheduling tasks

to work in parallel against data blocks, Oracle SQL does the same with

partitions. This is not only true for querying but it is also true for doing big loads.

Let’s look at the “fivdti” table we have

been using. A flat table would be

declared like this:

CREATE

TABLE fivdti

(f1 NUMBER,

i2 INT,

v3 VARCHAR2(50),

d4 DATE,

t5 TIMESTAMP,

v6 VARCHAR2(200),

i7

INT);

A partitioned table declaration, using a hash

partitioning scheme would look like this:

CREATE

TABLE fivdti

(f1 NUMBER,

i2 INT,

v3 VARCHAR2(50),

d4 DATE,

t5 TIMESTAMP,

v6 VARCHAR2(200),

i7

INT)

PARTITION BY HASH(i7)

PARTITIONS 10

PARALLEL;

With the simple addition of the partition clause at the

bottom of the CREATE TABLE clause, you’ve empowered Oracle to exploit big

optimizations for processing. The clause tells Oracle that the table should be

divided into 10 partitions, and the partition for a row is determined by

performing a hash operation on the value of the i7 column. If you were to compare load rates using OLH,

SQL*Loader, or SQL for the flat table and the table that is partitioned, you

would typically see a dramatic difference that favors partitioning. The same holds true for SQL. When querying partitioned tables, SQL can do

all sorts of tricks under the covers to use parallel query technology that

subdivides a job and maximizes parallel CPU and IO.

Oracle table partitioning comes in various flavors such as hash, list, and range. They also can be composites of

the same. OLH supports all partition methods except reference

partitioning and virtual column-based partitioning.

Advantages of OCI Direct Path

OCI Direct

Path is a well-established method of loading data into Oracle using OCI

(Oracle’s C based client interface) or SQL*Loader. It is a code path dedicated to bulk loading

and its key advantage is that it bypasses Oracle SQL, which makes it very

efficient.

Virtually

all relational database systems including Oracle are built on two layers of software:

one for managing data at the row level (i.e. SQL), and another for managing

data at the block level (i.e. storage). Loading through SQL (i.e. Oracle’s front door) is expensive. It’s okay when one is inserting a singleton

row or a small array of rows, but it uses a lot of code path before the rows

are passed onto storage and are copied into data blocks that ultimately get

written to disk.

OCI Direct

Path load is a short cut with an API whose code path both on the client and in

the Oracle database is streamlined for loading. It does the work of preparing rows for storage in data blocks using

client resources. (For our case the

client is OLH running in Hadoop.) It

then sends blocks of rows to Oracle’s storage layer in a form close to what

will be written to disk on a code path that minimizes contention: rows don’t

need to pass through Oracle's buffer cache layer. It also maximizes parallelism for multi-block

IO. OCI Direct Path can also take

advantage of presorted data which helps if it needs to build indexes for a

table.

Running an OLH Job With OCI Direct Path

This pretty much looks the same as running a job with JDBC,

except that the reduce phase always executes (since the target table is

partitioned) , and it is much faster. For both JDBC and OCI Direct Path the actual loading of the Oracle

table occurs when the Reduce phase is 67% complete. For large loads approximating or exceeding a terabyte

you will see a big difference in the time spent in this phase. OCI Direct Path is much faster than JDBC.

Are You Balanced?

![Balanced]()

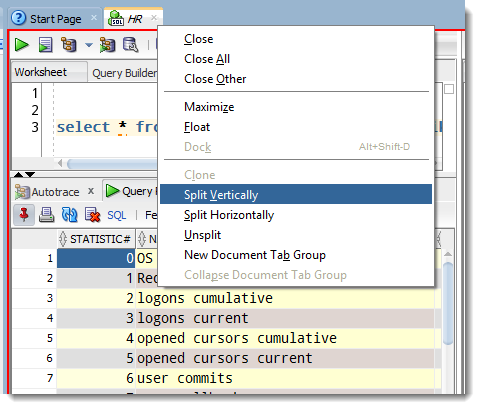

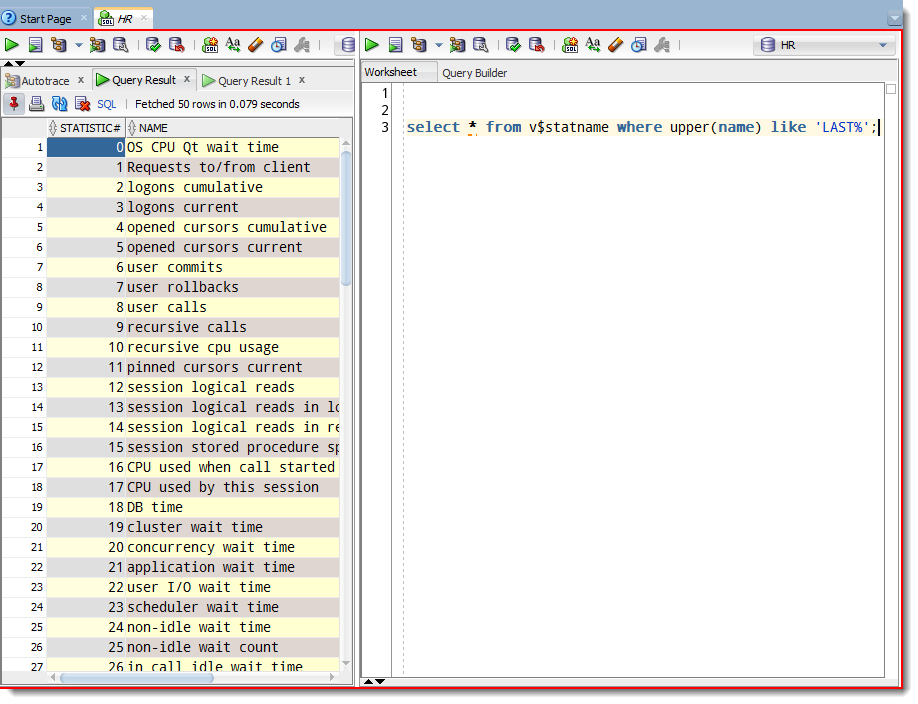

Rule 3: After running an OLH load, check out the Oraloader report to see if it is balanced.

After the run of a successful OLH job, the output directory

(specified by the “mapred.output.dir” property) generates an elegant report called “oraloader-report.txt”

that details the work done in the reduce phase. It identifies reducer tasks that ran and

associated statistics of their workload: bytes loaded, records loaded, and duration of the tasks (in seconds). If the load is not balanced, the values for

bytes and duration will vary significantly between reduce tasks, and you will want to make

adjustments.

Optimizing OLH and OCI Direct Path

Now we will discuss basic steps to optimize OLH using OCI Direct

Path:

· Choosing a good number for Reducer Tasks

· Enabling the OLH Sampler

· Finding the sweet spot for Hadoop Map Reduce

payloads

· If possible load using SDP transport protocol

Choosing a Number for Reducer Tasks

Rule 4: When using OCI Direct Path you want to choose the number of

reducer tasks to be close to a multiple of the number of reducer slots allocated on your

Hadoop cluster.

Reducer slots in Hadoop mean the number of processes that

can run in a Hadoop cluster at once, performing the reduce phase for an OLH

job. The Hadoop Map/Reduce

Administration UI displays this as Reduce Task Capacity. Typically you choose some multiple of the

number of reducer slots available. For example if the reduce task capacity in

the Hadoop cluster is 50, then a mapred.reduce.tasks value of 50 or 100 should

work well.

The purpose of this rule is to try to get reducers running

and loading at the same time, and to make sure all available slots are being

used. Not doing this can be

costly. For example, suppose there are

50 reducer slots but you set the number of reducer tasks to 51. If reduce loads are balanced then the 50

reducer slots will start and finish at roughly the same time, but you will to

wait for the singleton 51st task to run, which will double the time the reduce phase spends loading the data.

Rule 4 only works fully to your advantage when the data

sets are balanced (i.e. you are using the Sampler) and your OLH job is not

competing with other ongoing Map Reduce jobs that can steal reduce slots that

you were expecting to use. Note that Apache actually recommends a value close to a multiple of the number of reducer slots, for dealing with situations where reducers are not balanced.

This takes us to the next rule.

Rule 5: Always use the OLH Sampler.

The OLH Sampler

![Sampler]()

The OLH Sampler is an optional feature of OLH that does a

great job of balancing the workloads of reducer tasks when partitions are not balanced. (Note that the Sampler works with all OLH load methods, not just OCI Direct Path). You can control the Sampler manually by

setting the following property to “true” or “false” (for recent versions of OLH

the Sampler is turned on by default):

-D oracle.hadoop.loader.sampler.enableSampling=true

For example, suppose I had a customer table which was

partitioned using list partitioning representing the fifty states in the United

States. Most likely the partition

representing California will be much larger than the state of New Hampshire. Without enabling the OLH Sampler, a single

reducer task has the burden of publishing a whole partition. This means that one reducer will have to

publish California records while another will be tasked to publish the records

from New Hampshire. This will cause

skew, where some tasks have bigger workloads than others. The OLH Sampler addresses this pathology,

and breaks up large partitions into smaller equal sized units that can be

dispatched evenly across various reducer tasks.

The overhead of the OLH Sampler is very small for big data

payloads. A Hadoop Map Reduce job

typically takes minutes or hours, and the sampler overhead typically takes a

few seconds. (OLH console output tells

you at the outset if the Sampler is running and how much time it cost.) It runs at the beginning of the Map Reduce

job and samples the dataset to determine differences between partition sizes,

it then creates an partition strategy which balances the reduce load evenly.

Another pathology that the Samper addresses is when you have

more available reducer slots than partitions in your table. For instance suppose your table has 10

partitions but your Hadoop cluster has 50 reducer slots free. You would want to set the number of reduce

tasks to take advantage of all these reducer slots to speed up the load.

-D mapred.reduce.tasks=50

But without the Sampler enabled this tuning knob would not

have the desired effect. When the

Sampler is not enabled, partitions are restricted to a single reducer task,

which means that only 10 reducers will do real work, and the other 40 reduce slots will have

nothing to do.

Based on our experience the Sampler should be used virtually

all the time. The only situation to be

wary of is when the Hadoop input splits are clustered by the reduce key. (e.g. the input data living in HDFS

files is sorted by the value of the partition column). Under these circumstances loads might still

be unbalanced. The work-around for

clustered data is to force the Sampler to spend more time looking at the

distribution of data by looking at more splits. (By default it looks at at least five). This is done by using the following property

and setting <N > to a higher number.

-D

oracle.hadoop.loader.sampler.minSplits=<N>

Again the higher number will impose more Sampler overhead

at the beginning of the job but this should be rewarded with more efficient use

of Hadoop resources

Finding the Sweet Spot for Hadoop Map Reduce Payloads

Rule 6: Experiment with different sized payloads.

Hadoop is great technology that does a good job of making

sure that Map Reduce payloads scale. That being said, the resources of a Hadoop cluster are still finite, and

there is a breaking point where load sizes are simply too big. Hadoop’s scaling typically breaks down in the

reduce shuffle/sort stage where there is a tremendous amount of disk and

network IO going on within a Hadoop cluster to move sorted data to designated

systems where reducer tasks will do the actual loading. A telling sign is when you see your Hadoop job start to suffer from failed and

restarted task attempts in the reduce phase. The other

obvious sign is that when you double your payload, the time to process the load

is greater than a factor of 2.

It’s a good idea to spend some time experimenting with

different load sizes to see what your Hadoop configuration can handle. Obviously, if you break down a single big job into a series of smaller jobs, you will be paying a higher cost of overhead for starting up and tearing down multiple Map Reduce jobs. That being said, breaking down a 90 minute

OLH payload into three smaller 30 minute payloads is a perfectly reasonable strategy, since

the startup/teardown overhead for running each OLH job is still very small

compared to the total time running.

Use the SDP Protocol on Exadata and the BDA

![RaceCar]()

Rule 7: If you are using Oracle Exadata and Oracle BDA with Infiniband,

use SDP protocol.

SDP is a network transport protocol supported for loading

tables living in an Oracle Exadata machine with HDFS data living in Oracle BDA

(Big Data Appliance). Exadata and BDA

move data using Infiniband, which has very high throughput and low

latency. Because Infiniband has such

high bandwidth, it can create bottlenecks in conventional TCP sockets.

SDP is an alternative

networking protocol that uses RDMA technology which allows network interfaces

to move data packets directly into RAM without involving CPU. In other words it doesn’t reproduce the

network bottleneck that is seen when using TCP. In

performance test runs we’ve found that using SDP improves the load stage of an

OLH Direct Path by ten to twenty percent.

If you are running OLH Direct Path jobs using Infiniband,

you will want to take advantage of SDP. The way this is done is to configure Exadata listeners with an SDP port,

and assert an additional Oracle connection descriptor dedicated to SDP when

running OLH.

<property>

<name>oracle.hadoop.loader.connection.oci_url</name>

<value>

(HOST=192.168.40.200)(PORT=1523))(CONNECT_DATA=(SERVER=DEDICATED)(SERVICE_NAME=dbm)))

</value>

</property>This esoteric property isolates SDP usage only when OLH

reduce tasks create connections to Oracle to execute OCI Direct Path loading. All other network activity uses standard TCP connections.

Hopefully, we’ve come to understand the value of social listening and social monitoring. It’s how we as brands and organizations learn what people are saying about us across the social web, and how we get to know our customers intimately, learning their values and expectations. It’s what allows us to respond in timely, relevant ways, driving new customers, referrals, loyalty, and increased sales.

Hopefully, we’ve come to understand the value of social listening and social monitoring. It’s how we as brands and organizations learn what people are saying about us across the social web, and how we get to know our customers intimately, learning their values and expectations. It’s what allows us to respond in timely, relevant ways, driving new customers, referrals, loyalty, and increased sales.

Meet the Oracle WebCenter Team: Michael Snow, Director of Product Marketing

Meet the Oracle WebCenter Team: Michael Snow, Director of Product Marketing

![image[7][2][2][2] image[7][2][2][2]](http://soacommunity.files.wordpress.com/2013/04/image7222.png?w=20&h=20&h=20)

![clip_image002[8][4][2][2][2] clip_image002[8][4][2][2][2]](http://soacommunity.files.wordpress.com/2013/04/clip_image00284222.jpg?w=26&h=23&h=23)