Oracle Database 12c has now been available for download for almost three quarters of a year.

Since the release, the German DBA Community has held an "Oracle Database Days" event every month, each on a different topic around the 12c database.

We are continuing this tradition in March, this time under the motto "High Availability".

This half-day event covers Backup & Recovery, RAC, and Data Guard in Oracle 12c, including (but not limited to!) the changes introduced by Pluggable Databases.

The individual talks also cover existing technologies, showing how 12c develops them further and how they can already be put to use today.

Each event starts at 11:30 a.m.

Dates and venues:

• March 18, 2014: Oracle Customer Visit Center Berlin

• March 25, 2014: Oracle office, Stuttgart

• March 27, 2014: Oracle office, Düsseldorf

Participation in the event is free of charge. Further information on the agenda and on all other planned Oracle Database Days, as well as the option to register, can be found at the following link:

http://tinyurl.com/odd12c

Directions can also be found under Oracle Events at www.oracle.com (Berlin, Stuttgart, Düsseldorf). So it is best to register right away!

12c Oracle Days in March

JavaOne 2014 Call for Papers Now Open!

It is time to submit all those talks you have been thinking about. "We have a huge focus on community at this event, and it would be great to have many proposals from the developer community," explains JavaOne Content Chair Stephen Chin.

There is a new dedicated track for Agile development this year, making a total of nine Java tracks. This year's tracks are:

There is no time to waste! The call for papers closes April 15th at 11:59 p.m. PDT.

There is a rolling submission process, so submit early!

Hitachi Transforms Human Capital Management Practices to Embrace Social Sourcing

By Alison Weiss - Originally posted on Profit

Talent sourcing and recruiting have always focused on connecting employers with skilled candidates, but today more organizations are taking advantage of social media to improve the chances of making a good match. In fact, the Society for Human Resource Management reported that 77 percent of organizations used social networking sites to recruit potential job candidates in 2012 compared to just 56 percent in 2011. And for 80 percent of respondents, one of the main reasons social sourcing is so appealing is that it provides an effective way to identify and recruit top talent – the very candidates who are often not in active job-seeking mode.

Recently, leaders at Dallas, Texas-based Hitachi Consulting, a subsidiary of Hitachi, Ltd., decided to transform the company's talent acquisition practices by embracing social sourcing. The Oracle PartnerNetwork Diamond-level partner provides IT consulting and management consulting services and has 5,000 consultants based around the world. Talented people are at the heart of the company’s success, but human resources executives knew it would be possible to improve employee recruitment and talent management by implementing Oracle Taleo Recruiting and Onboarding integrated with Oracle Taleo Social Sourcing. They decided to implement the solution for their US practice in 2012.

“Being able to understand talent and talent movement across the organization and improve mobility is central to our mission to deliver innovation,” says Sona Manzo, vice president of Oracle Solutions-HCM Cloud at Hitachi Consulting. “And an integrated cloud-based talent management solution such as Taleo allows us to develop critical talent pools and assess, select, hire, and onboard that talent more effectively.”

Leveraging social sourcing through employees

Because Taleo is cloud-based, it is easy to use and accessible anywhere – desktop or mobile. It gives users the ability to push out information into the marketplace in real-time about jobs available at Hitachi Consulting. The company is using Taleo Social Sourcing to define targeted recruitment marketing campaigns that can be executed by individual employees using Twitter, LinkedIn, and Facebook. It is leveraging the platform so all Hitachi Consulting employees can be involved in recruiting. Recruiters report that employee access to social media gives them a much bigger reach to find the right talent.

Three years ago, Hitachi Consulting’s employee recruitment program was completely paper-based and labor-intensive. Because of the number of requisitions managed by recruiters, employee referrals sometimes fell through the cracks or lacked follow-up, and there was no central repository of information.

“The best hires come from employee referrals because great employees want to work with other great people. And individuals brought in by employee referrals are more likely to have the same values, fit our culture better, and stay longer,” says Julie Jochems, director of talent acquisition for the US at Hitachi Consulting. “But we were not able to maximize our employee referrals due to our lack of integrated talent acquisition capabilities.”

Now, Taleo Social Sourcing helps Hitachi Consulting employees to make better use of their valuable networks to find high quality employee referrals. When the talent acquisition team sends out key marketing messages, the messages can be sent to a targeted list of Hitachi employees. From there, employees can select where they want to send the message. If they send it to all their LinkedIn contacts, for example, anyone who applies will be tagged and routed through Taleo’s applicant tracking system, which is linked to the broader Taleo talent management platform. This referral source tagging is retained regardless of how many times the position is shared or distributed by members of the employees’ social networks. Jochems and her team now have a clear idea of where referrals originate. Tracking functionality also makes it possible to quickly compare results to see which social media sites or job boards are generating the best referrals. The financial benefits are impressive; after the first year, Hitachi Consulting achieved more than US$1 million in employee referral cost savings.

“Our employee referrals have significantly improved. The volume of quality referrals has increased dramatically, those being referred are a better fit for our company overall, they are able to make an impact on our organization more quickly, and we are seeing our overall retention rate getting better over time,” says Jochems. “Before, we had a 17 percent employee referral rate. But in just a year’s time, that rate now averages 35 percent of our overall hiring. We have also seen a positive impact on employee engagement. The benefits have been dramatic.”

Streamlining the onboarding process

When Hitachi Ltd, Hitachi Consulting’s parent company, selected Taleo Enterprise Recruiting for the 323,000-plus-employee organization, Hitachi Consulting provided core experts to the deployment team to help leverage key knowledge and act as early adopters. Hitachi Consulting continues to spearhead the deployment of the social and mobile components of the suite. It is now live with Taleo Enterprise Recruiting, Social Sourcing, and Transitions (onboarding), and has developed a global framework to support the rollout of Transitions through the broader Hitachi Ltd organization.

According to Manzo, the company is already seeing strategic and administrative benefits. Information gathered from job seekers during recruiting is held in a repository and is automatically accessed during employee onboarding. This eliminates the paperwork that is traditionally part of the onboarding process and reduces the risk of data errors. And because the onboarding process is more streamlined, it is easier to introduce new employees to Hitachi Consulting’s culture and to make them feel comfortable and included even prior to their start date.

Next Steps:

Video: Modern HCM is Social HCM: 2014 HCM World Keynote Address by Larry Ellison

Video: The Principal Grows Globally with Oracle Fusion Applications

Keep Informed! March 2014 Database Insider Newsletter

This edition includes the following and much more!

Get your copy today.

Get the Most Out of Your Week at the COLLABORATE 14 Conference

From April 7 to 11, Oracle technology users from around the world will gather in Las Vegas, Nevada, for COLLABORATE 14. Conference organizers offer tips for how attendees can plan their agenda to get the most out of their experience.

Oracle Excellence Awards Honors Outstanding Data Warehouse Leaders and Database Administrators

Every year, Oracle customers and partners implement revolutionary solutions using Oracle technology. To honor such pioneering work, Oracle has conferred Oracle Excellence Awards on three data warehouse leaders and three database administrators who have demonstrated a particularly high degree of leadership and technical mastery.

PCI Requirements Go into Effect Amid High-Profile Retail Data Breaches

Major breaches of retail customer data are making headlines just as the latest version of the Payment Card Industry Data Security Standards go into effect. Find out how Oracle's defense-in-depth strategy can help you protect sensitive data.

Oracle Again in Gartner Leaders Quadrant for 2014 BI and Analytic Platforms

Gartner issued the 2014 BI Platforms and Analytic Platforms Magic Quadrant placing Oracle in the Leaders quadrant again.

The Good News

The key aspect for Oracle BI partners is that Gartner sees acceleration in the trend “from BI systems used primarily for measurement and reporting to those that also support analysis, prediction, forecasting and optimization.” That is clearly good for us, since all these capabilities can be deployed by Oracle partners with OBI Foundation Suite, Oracle Advanced Analytics and Real-Time Decisions.

Also, “the race is on to fill the gap in governed data discovery.” Endeca Information Discovery 3.1, and its integration with Oracle BI, addresses this self-service requirement better than any of the competition. Be aware, though, that Gartner did not consider this in the 2014 report, since version 3.1 was not out in time for inclusion.

Oracle is a Leader for the 8th consecutive year. Top reasons cited include:

- Broad product suite that works with many heterogeneous data sources for large-scale, multi-business-unit and multi-geography deployments

- Integration with Oracle apps and technology, and with Oracle Hyperion EPM

- Prebuilt analytic applications – horizontal Oracle BI Applications and the many industry analytic applications built on Oracle BI

- Among the largest deployments in terms of numbers of users and data sizes

- 69% of Oracle BI customers consider it their enterprise BI standard

- Large, global network of partners

Oracle has secured distribution rights for this Gartner research. Get it here.

Preserving Unpacked Software During a Package Uninstall

I love it when I can wriggle out of the unintended side effects created by an automated system designed to simplify my life.

Here's a side effect created by the very good Image Packaging System (IPS) in our beloved Oracle Solaris 11. If you use the IPS to uninstall all packaged content from a directory, it also removes the directory. Not good if you also kinda sorta loaded unpackaged content into that directory.

For instance, let's say you worked with a third-party IPS package that installed its software into /usr/local. After a pause to polish the chrome on your custom Softail Deluxe, you install a second application into /usr/local from a tar file. What happens to that second application when you use IPS to remove the third-party IPS package from the /usr/local directory? Yup. IPS dumps the directory on the asphalt and high-sides the unpackaged content all the way to /var/pkg/lost+found.

Thank goodness somebody watches out for those of us who don't follow directions. Alta Elstad, from the Solaris Documentation Team at Oracle, is one of them. Here's how she suggests you avoid this problem.

How to Preserve the Directory

To prevent the packaged directory from being removed along with its content, package the directory separately. Create an IPS package that delivers only the one directory or directory structure that you want. Then that directory structure will remain in place until you uninstall that specific package. Uninstalling a different package that delivers content to that directory will not remove the directory.
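The rule can be pictured as a simple ownership model: a directory stays in place as long as at least one installed package still delivers it. The following Python sketch is only a toy illustration of the behavior described in this post, not how IPS is implemented. The class `ToyImage`, the package name `thirdparty`, and the file name `app-from-tar` are made up for the example; `usrlocal` is the directory-only package built in the steps below.

```python
from collections import defaultdict


class ToyImage:
    """Toy model of the behavior described above (not the real IPS logic)."""

    def __init__(self):
        self.delivered_by = defaultdict(set)  # directory -> packages delivering it
        self.unpackaged = defaultdict(list)   # directory -> unpackaged files in it
        self.lost_found = []                  # files salvaged when a directory goes away

    def install(self, package, directories):
        for directory in directories:
            self.delivered_by[directory].add(package)

    def add_unpackaged(self, directory, filename):
        self.unpackaged[directory].append(filename)

    def uninstall(self, package):
        for directory in list(self.delivered_by):
            self.delivered_by[directory].discard(package)
            # No remaining package delivers the directory: it is removed and
            # any unpackaged content is moved aside.
            if not self.delivered_by[directory]:
                del self.delivered_by[directory]
                self.lost_found.extend(self.unpackaged.pop(directory, []))

    def exists(self, directory):
        return directory in self.delivered_by


def demo(with_directory_package):
    image = ToyImage()
    if with_directory_package:
        image.install("usrlocal", ["/usr/local"])    # directory-only package
    image.install("thirdparty", ["/usr/local"])      # hypothetical third-party package
    image.add_unpackaged("/usr/local", "app-from-tar")
    image.uninstall("thirdparty")
    return image.exists("/usr/local"), image.lost_found


print(demo(with_directory_package=False))
print(demo(with_directory_package=True))
```

In the first call the directory disappears and the unpackaged file ends up in the lost-and-found list; in the second, the separate directory package keeps /usr/local in place.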

Here's a detailed example.

- Create the directory structure you want to deliver. This example shows /usr/local. You could easily expand this to include /usr/local/bin and other subdirectories if necessary.

    $ mkdir -p usrlocal/usr/local

- Create the initial package manifest.

    $ pkgsend generate usrlocal | pkgfmt > usrlocal.p5m.1
    $ cat usrlocal.p5m.1
    dir path=usr owner=root group=bin mode=0755
    dir path=usr/local owner=root group=bin mode=0755

- Create a pkgmogrify input file to add metadata and to exclude delivering /usr since that directory is already delivered by Oracle Solaris. You might also want to add transforms to change directory ownership or permissions.

    $ cat usrlocal.mog
    set name=pkg.fmri value=pkg://site/usrlocal@1.0
    set name=pkg.summary value="Create the /usr/local directory."
    set name=pkg.description value="This package installs the /usr/local \
    directory so that /usr/local remains available for unpackaged files."
    set name=variant.arch value=$(ARCH)
    <transform dir path=usr$->drop>

- Apply the changes to the initial manifest.

    $ pkgmogrify -DARCH=`uname -p` usrlocal.p5m.1 usrlocal.mog | pkgfmt > usrlocal.p5m.2
    $ cat usrlocal.p5m.2
    set name=pkg.fmri value=pkg://site/usrlocal@1.0
    set name=pkg.summary value="Create the /usr/local directory."
    set name=pkg.description value="This package installs the /usr/local \
    directory so that /usr/local remains available for unpackaged files."
    set name=variant.arch value=$(ARCH)
    <transform dir path=usr$->drop>

- Check your work.

    $ pkglint usrlocal.p5m.2
    Lint engine setup...
    Starting lint run...
    $

- Publish the package to your repository.

    $ pkgsend -s yourlocalrepo publish -d usrlocal usrlocal.p5m.2
    pkg://site/usrlocal@1.0,5.11:20140303T180555Z
    PUBLISHED

- Make sure you can see the new package that you want to install.

    $ pkg refresh site
    $ pkg list -a usrlocal
    NAME (PUBLISHER)    VERSION    IFO
    usrlocal (site)     1.0        ---

- Install the package.

    $ pkg install -v usrlocal
    Packages to install: 1
    Estimated space available: 20.66 GB
    Estimated space to be consumed: 454.42 MB
    Create boot environment: No
    Create backup boot environment: No
    Rebuild boot archive: No
    Changed packages:
    site
      usrlocal
        None -> 1.0,5.11:20140303T180555Z
    PHASE                              ITEMS
    Installing new actions             5/5
    Updating package state database    Done
    Updating package cache             0/0
    Updating image state               Done
    Creating fast lookup database      Done
    Reading search index               Done
    Updating search index              1/1

- Make sure the package is installed.

    $ pkg list usrlocal
    NAME (PUBLISHER)    VERSION    IFO
    usrlocal (site)     1.0        i--
    $ pkg info usrlocal
    Name: usrlocal
    Summary: Create the /usr/local directory.
    Description: This package installs the /usr/local directory so that /usr/local remains available for unpackaged files.
    State: Installed
    Publisher: site
    Version: 1.0
    Build Release: 5.11
    Branch: None
    Packaging Date: March 3, 2014 06:05:55 PM
    Size: 0.00 B
    FMRI: pkg://site/usrlocal@1.0,5.11:20140303T180555Z
    $ ls -ld /usr/local
    drwxr-xr-x 2 root bin 2 Mar 3 10:17 /usr/local/

For More Information

- Packaging and Delivering Software With the Image Packaging System

- How to Create and Publish Packages to an IPS Repository on Oracle Solaris 11

About the Photograph

Photograph of Vancouver's laughing statues courtesy of BMK via Wikipedia Commons Creative Commons License 2.0

- Rick


Part III: WebLogic-Database Integration Podcast Series

Random or Sequential I/O? How to find out with DTrace

One of the perennial performance or sizing questions for a workload is how much I/O it generates. This can be broken down several ways:

- Network versus disk

- Read versus write

- Random versus sequential (for the disk component)

Many of the metrics have always been easy to quantify. There are standard operating system tools to measure disk I/O, network packets and bytes, etc. The question of how much random versus sequential I/O is much harder to answer, but it can be an important one if your storage is a potential limiter of performance and that storage has a significant amount of "conventional" disk - i.e. spindles of rotating rust.

Sequential I/O on conventional disks can generally be served at a higher throughput because conventional disks can avoid almost all rotational delay and seek time penalties. Random I/O will always incur these penalties, at a varying rate.

So if you need to decompose your workload enough to understand how much Random versus Sequential I/O there is, what do you do? You may be able to discuss it with the application developer to get their take on how the application behaves, what it stores, and how and when it needs to fetch data into memory or write or update it on disk. This information (or access to the developer) is not always available though.

What about if I can get inside the application and measure the I/O as it happens? That is a task for DTrace. I have developed a script that tallies up all I/O for all filesystems and can tell you how much of the I/O is Sequential (a read or write at a location in a file is then followed by a read or write at the next location for that file) or Random.
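To make the sequential-versus-random definition concrete, here is a minimal Python sketch of the same bookkeeping. It is not the DTrace script itself. It assumes each I/O is available as a hypothetical `(file_id, offset, size)` tuple in the order it happened, and it counts the first access to a file as random, which is a simplifying assumption of this example.

```python
def classify_io(events):
    """Count sequential and random I/Os.

    events: iterable of (file_id, offset, size) tuples in time order.
    An I/O is sequential when it starts exactly where the previous I/O
    on the same file ended; anything else counts as random.
    """
    next_offset = {}  # file_id -> offset at which the previous I/O ended
    counts = {"sequential": 0, "random": 0}
    for file_id, offset, size in events:
        if next_offset.get(file_id) == offset:
            counts["sequential"] += 1
        else:
            counts["random"] += 1
        next_offset[file_id] = offset + size
    return counts


# Hypothetical trace: three back-to-back 8 KB reads on file "a", one jump
# backwards on "a", then two back-to-back 512-byte reads on file "b".
trace = [
    ("a", 0, 8192),
    ("a", 8192, 8192),
    ("a", 16384, 8192),
    ("a", 4096, 8192),
    ("b", 0, 512),
    ("b", 512, 512),
]
print(classify_io(trace))
```

Tracking the expected next offset per file, rather than globally, keeps interleaved streams on different files from being misclassified as random.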

Here is some example output from the script, where I first ran a Sequential filebench test:

    Sample interval: 120 seconds
    End time: 2011 Mar 23 10:42:20
    FYI: Page I/O on VREG vnodes after read/write:
    fop_read fop_getpage 2

    /u           rd/s      wr/s      rdKB/s     wrKB/s      acc/look:  0
    sequential   2616.20   0.62501   2676408    0.718235    readdir:   0
    random       2.65836   0.20834   2713.623   0.641998    geta/seta: 0
    page         0.00833   0         0.033334   0           cr/rm:     0
    TOTAL        2618.86   0.83334   2679122    1.360233    other:     2.86669

then I ran a random filebench test:

    Sample interval: 60 seconds
    End time: 2011 Mar 22 12:09:28
    FYI: Page I/O on VREG vnodes after read/write:
    fop_write fop_putpage 1
    fop_read fop_getpage 5
    fop_write fop_getpage 18

    /u           rd/s      wr/s      rdKB/s     wrKB/s      acc/look:  2.28871
    sequential   157.036   161.446   1255.552   1118.578    readdir:   0
    random       20113.5   17119.0   160860.2   136919.0    geta/seta: 0
    page         0         4.27672   0          17.10687    cr/rm:     0.05012
    TOTAL        20270.6   17284.7   162115.7   138054.7    other:     5.26237

As you can see the script outputs a table breaking down reads and writes by operations and by KB/s, but also by "sequential", "random" and "page". There are also some totals, plus some statistics on a few other VFS operations.
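To put the table in proportion, the per-category rates can be turned into shares of the total. The sketch below is Python rather than DTrace and uses the rd/s and wr/s figures from the random filebench sample above; `io_share` is a hypothetical helper name, not part of the script.

```python
def io_share(rows, column):
    """Return each category's fraction of the column total (0.0 if the total is 0)."""
    total = sum(row[column] for row in rows.values())
    if total == 0:
        return {name: 0.0 for name in rows}
    return {name: row[column] / total for name, row in rows.items()}


# Operation rates copied from the random filebench sample output.
random_sample = {
    "sequential": {"rd/s": 157.036, "wr/s": 161.446},
    "random": {"rd/s": 20113.5, "wr/s": 17119.0},
    "page": {"rd/s": 0.0, "wr/s": 4.27672},
}

read_share = io_share(random_sample, "rd/s")
write_share = io_share(random_sample, "wr/s")
print(f"random reads:  {read_share['random']:.1%}")
print(f"random writes: {write_share['random']:.1%}")
```

For this sample, about 99 percent of both reads and writes are random, which matches what the random filebench workload was meant to generate.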

What does "page" mean? Well, this script uses Solaris' virtual filesystem interface to measure filesystem I/O. Through this interface we can see conventional read and write operations. However, there are also "page" operations that do not necessarily map to a read or write from an application. Because of this uncertainty I decided to list the I/O via these page operations separately. They will generally be small for an application that uses conventional I/O calls, but may be large if an application is using the mmap() interface, for example.

For the purposes of this blog entry I have simplified the output of the script. It normally outputs a table for every filesystem that has I/O during its run. The script could easily be modified to select a desired filesystem. It could also be modified to select I/O related to a specific user, process, etc.


Oracle Introduces In-Memory Cost Management Applications for Process and Discrete Industries

In 2013, “Big Data” surpassed “Social Media” and “Mobility” as one of the most searched concepts on the Internet. Gerd Leonhard, a noted futurist, author and CEO of the Futures Agency, stated that Big Data’s economic and social importance will rival that of the oil economy by 2020. With the ever-increasing global adoption of smart phones, we are heading towards 5 billion connected devices. With the addition of other electronic devices such as sensors, wearables, and smart homes, that number is expected to grow to 50 billion by 2018. The amount of data being collected across all of these platforms is mind-boggling, and companies that realize the potential of what can be done with that data are breaking away from the pack. Those companies are now investing time and money in tools that can convert all that data into information that drives actionable insights. Oracle’s new In-Memory Cost Management applications for Oracle E-Business Suite offer those insights to users in discrete manufacturing with Oracle In-Memory Cost Management for Discrete Industries and to users in process manufacturing with Oracle In-Memory Cost Management for Process Industries.


In today’s global manufacturing environment, manufacturers face a multitude of challenges due to regulatory compliance, stringent quality processes and increasing manufacturing costs. In order to accurately calculate margins and maximize profits, organizations must gather, maintain and analyze growing volumes of current and historical cost data. However, existing cost management solutions have not kept up with the exploding volumes of data across global manufacturing operations and various supply chains. To help solve this critical business need, Oracle has introduced Oracle In-Memory Cost Management for Discrete Industries for Oracle E-Business Suite and Oracle In-Memory Cost Management for Process Industries for Oracle E-Business Suite. Utilizing the extreme performance delivered by Oracle Engineered Systems, the new Oracle In-Memory Cost Management solutions are a combination of software and hardware that enables real-time insight across virtually all aspects of cost management, allowing organizations to maximize revenue, increase profits and optimize operational costs and working capital.

Oracle In-Memory Cost Management applications help businesses make decisions in time to capture the highest possible profits and margins, and to discover hidden opportunities to shrink operational costs. Cost accountants, operations, finance and procurement managers can use Oracle In-Memory Cost Management solutions to quickly perform what-if simulations on complex cost data and instantly visualize the impact of changes to their businesses. While existing cost management solutions often require long wait times for batch processes to complete on huge data sets, Oracle In-Memory Cost Management solutions run efficiently in real-time and come pre-built with critical analytical features including Cost Impact Simulator, Gross Profit Analyzer and Cost Comparison Tool.


Oracle’s Cost Impact Simulator and Gross Profit Analyzer help organizations maximize revenue and increase profits by:

- Undertaking multidimensional cost analyses leveraging complex multi-level bills of material and routing data

- Performing detailed ‘what-if’ cost simulations and timely analyses of costs and related inventory valuations

- Easily assessing the impact of future margins including any potential downstream impact of unshipped orders and forecasted demand

Oracle’s Cost Comparison Tool enables users to quickly view and analyze the details of complex cost structures across multiple manufacturing locations so that businesses can reach timely decisions that allow them to identify the most profitable cost structures, simulate the enterprise-wide impact of cost changes and then propagate those savings across their enterprises. Oracle’s Cost Comparison Tool also helps businesses discover hidden opportunities to further shrink operational costs by processing and visualizing large volumes of cost element data quickly.

For customers in discrete industries such as automotive, aerospace and defense, high tech and industrial manufacturing, the Oracle In-Memory Cost Management for Discrete Industries suite of applications allows businesses to drive strategic cost management objectives by maximizing gross margins and profits, optimizing product cost structures through minimizing component costs, creating profitable product mixes across their global operations and finding the right products to both increase penetration in existing markets and enter new markets. Oracle In-Memory Cost Management for Process Industries does the same thing for customers in process industries such as natural resources, life sciences, food and beverage, chemicals and consumer goods.

For more information on these products, please check out these links:

- Oracle In-Memory Applications

- Datasheet: Oracle In-Memory Cost Management for Discrete Industries

- Datasheet: Oracle In-Memory Cost Management for Process Industries

- Press Release: Oracle Drives Strategic Cost Management with New In-Memory Application for Oracle E-Business Suite

- Press Release: Oracle Announces New In-Memory Applications and Faster Performance for All Oracle Applications on Oracle Engineered Systems

- Supply Chain Management Review Article: Oracle In-Memory Cost Management Launched

- Forbes Article: President of Oracle Speaks on Big Data at Oracle Supply Chain Conference

BI apps 11.1.1.7.1 Cumulative Patch 1 is available now

BI Applications 11.1.1.7.1 Cumulative Patch 1 is available now.

Patch 17546336 - BIAPPS 11.1.1.7.1 ODI CUMULATIVE PATCH 1 can be downloaded from My Oracle Support.

To download the patch, navigate to My Oracle Support > Patches & Updates. Search for the patch number (17546336) in the Patch Name or Number field. Follow the instructions in the Readme to apply the patch.

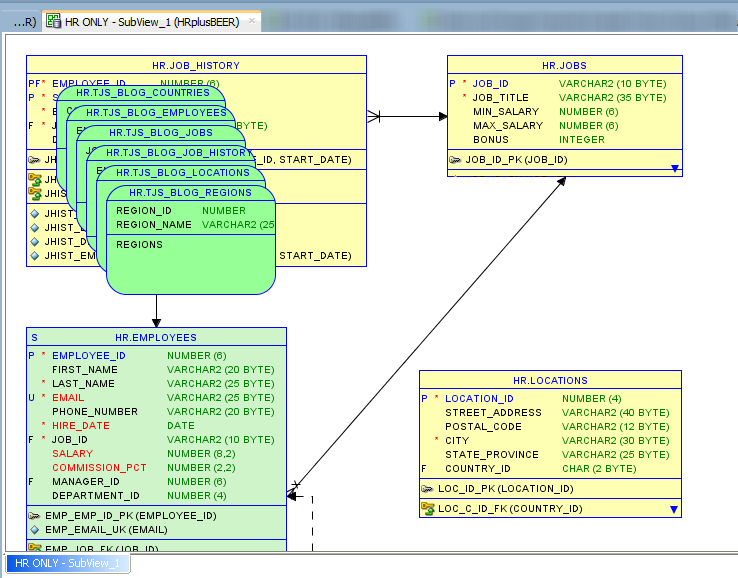

Adding and Removing Objects from a SQL Developer Data Modeler SubView

SubViews are a sub-collection of model objects. They are very useful for breaking up very large designs into smaller, easier to digest pieces. If you need to brush up on the topic a bit, try these posts.

I recently received a note from a user (we’ll call him ‘Galo’) who was having problems figuring out how to add a table to an existing SubView.

It actually took me a few minutes to figure out. And ‘Galo’ actually figured it out on his own as well. But just in case you lack the patience or time to go through this puzzle, let’s show how easy it is to add and remove objects to and from SubViews.

No Existing SubView – Create And Add Objects

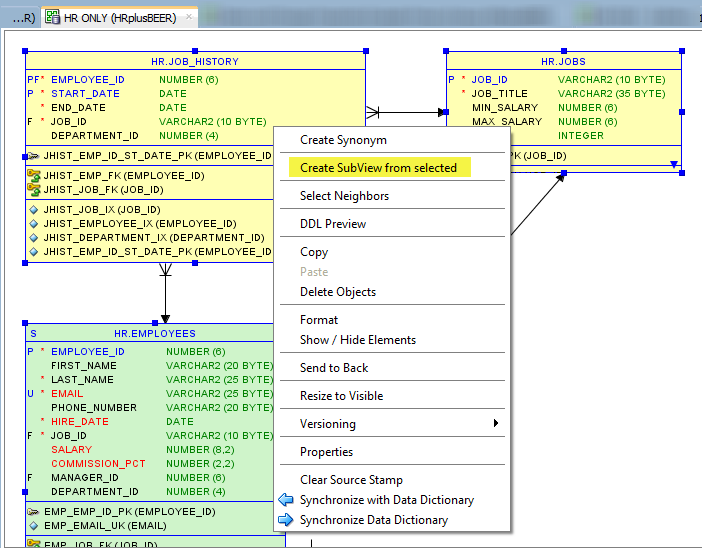

You can multi-select objects in the diagram, right-mouse-click, and say ‘Create SubView from selected.’

You can also create a SubView from Neighbors – a nice trick/click off of the model tree. It basically lets you pick one driving object and say, ‘take this and any related objects and move them to a SubView.’

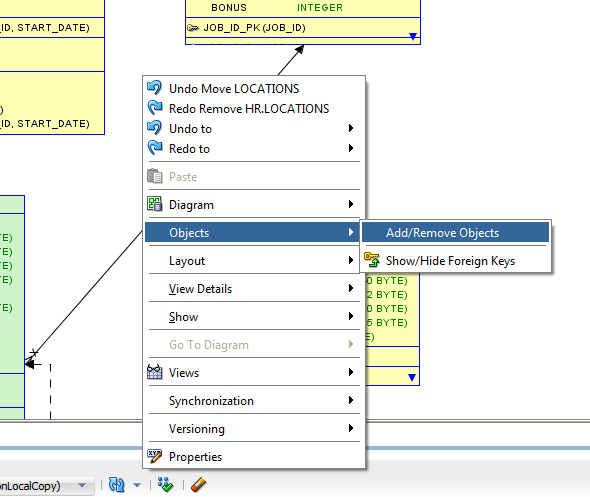

Add An Object to an Existing SubView

This is where Galo and I got stuck. But it’s intuitive, once you do figure it out.

Removing Objects from a SubView

Select the object in the diagram, right-mouse-click, and choose ‘Delete View.’ This will remove it from the SubView ONLY. If you say ‘Delete Object,’ it will remove it from the model entirely.

If you use the ‘Delete’ context menu from the SubView item in the tree – it again deletes the object from the design entirely! You can always UNDO this.

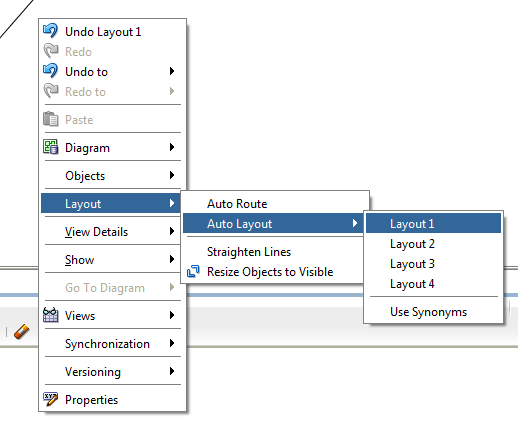

Version 4.0.1 Makes it a Bit Easier

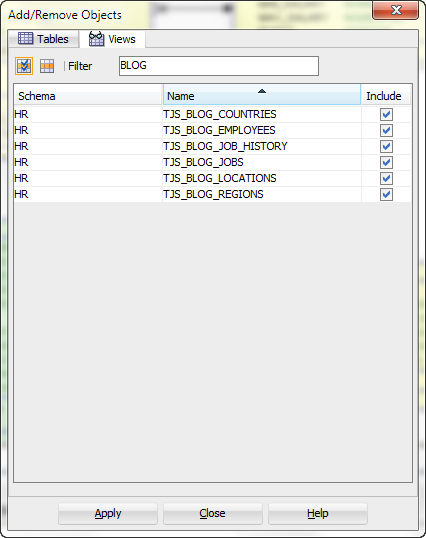

In the latest version, there’s a new dialog to manage what’s in and out for a SubView.

And then you get this:

I’ve asked for every view with the text ‘BLOG’ in the name. I can then use the ‘Select All’ button and say ‘Apply.’ Voila!

Don’t forget you can use the ‘Auto Layout’ to arrange your diagram objects to a more pleasing set of coordinates. Just right-click in the diagram to try the 4 different automatic layouts.

Oracle Database 11.2.0.4 Certified with E-Business Suite 12.2

I'm very happy to announce the certification of Oracle Database 11.2.0.4 with Oracle E-Business Suite 12.2.

To begin planning your database upgrade to 11.2.0.4, you should review our documentation in this order:

- Interoperability Notes: Oracle E-Business Suite 12.2 with Oracle Database 11g Release 2 (Note 1623879.1)

- R12.2 Consolidated List of Patches and Technology Bug Fixes (Note 1594274.1). The interoperability note references this note. It is crucial that you review all requirements in Section 2.2, "Database 11.2.0.4 Patches and Bug Numbers" of this note.

- Database Initialization Parameters for Oracle E-Business Suite Release 12 (Note 396009.1). Make certain that you review Section 4, "Release-Specific Database Initialization Parameters For Oracle 11gR2" and Section 6, "Additional Database Initialization Parameters for Oracle E-Business Suite Release 12.2".

What does this mean if you are upgrading to R12.2?

- This upgrade is highly recommended for all EBS 12.2 environments.

- If you are just beginning your R12.2 upgrade project, you should consider upgrading to Oracle Database 11.2.0.4 now.

- If you are currently in the midst of your R12.2 upgrade, you may continue as planned with Oracle Database 11.2.0.3 and upgrade to 11.2.0.4 as your R12.2 upgrade project and test plans permit.

- If your go-live date is soon, you may choose to continue as planned with Oracle Database 11.2.0.3 and upgrade to 11.2.0.4 after you go live with 12.2.

It may be useful to review the Oracle Database 11gR2 support policies and dates as part of your decision-making process:

- Lifetime Support Policy: Oracle Technology Products (PDF)

- Release Schedule of Current Database Releases (Note 742060.1)

Prerequisites

- 12.2.2 and higher

- Linux x86-64 (Oracle Linux 5, 6)

- Linux x86-64 (RH 5, 6)

- Linux x86-64 (SLES 10, 11)

- Oracle Solaris on SPARC (64-bit) (10, 11)

- Oracle Solaris on x86-64 (64-bit) (10, 11) - Database tier only

- IBM AIX on Power Systems (64-bit) (6.1, 7.1)

- HP-UX Itanium (11.31)

- Microsoft Windows x64 (64-bit)

- IBM: Linux on System z

The following database features and options are certified:

- Advanced Compression

- Active Data Guard

- Advanced Security Option (ASO) / Advanced Networking Option (ANO)

- Database Partitioning

- Data Guard Redo Apply with Physical Standby Databases

- Native PL/SQL compilation

- Oracle Label Security (OLS)

- Real Application Clusters (RAC)

- Real Application Testing

- Transparent Data Encryption (TDE) Column Encryption

- Transparent Data Encryption (TDE) Tablespace Encryption

- SecureFiles

- Virtual Private Database (VPD)

Pending Database Feature and Option Certifications

- Database Vault

About the pending certifications

Oracle's Revenue Recognition rules prohibit us from discussing certification and release dates, but you're welcome to monitor or subscribe to this blog for updates, which I'll post as soon as they're available.

References

- Interoperability Notes E-Business Suite Release 12.2 with Database 11g Release 2 (Note 1623879.1)

- Oracle E-Business Suite Release 12.2: Consolidated List of Patches and Technology Bug Fixes (Note 1594274.1)

- Database Initialization Parameters for Oracle Applications Release 12.2 (Note 396009.1)

- Database Preparation Guidelines for an Oracle E-Business Suite Release 12.2 Upgrade (Note 1349240.1)

- Using Oracle 11g Release 2 Real Application Clusters with Oracle E-Business Suite Release 12.2 (Note 1453213.1)

- Using Oracle E-Business Suite Release 12.2 with a Database Tier Only Platform on 11g Release 2 (Note 1613707.1)

- Export/Import Process for Oracle E-Business Suite Release 12.2 Database Instances Using Oracle Database 11.2 (Note 1613716.1)

- Using TDE Column Encryption with Oracle E-Business Suite Release 12.2 (Note 1585696.1)

- Using TDE Tablespace Encryption with Oracle E-Business Suite Release 12.2 (Note 1585296.1)

- Enabling SSL in Oracle Applications Release 12.2 (Note 1367293.1)

- Lifetime Support Policy: Oracle Technology Products (PDF)

- Release Schedule of Current Database Releases (Note 742060.1)

The preceding is intended to outline our general product direction. It is intended for information purposes only, and may not be incorporated into any contract. It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions. The development, release, and timing of any features or functionality described for Oracle's products remains at the sole discretion of Oracle.

The Path to a $16 Billion Acquisition? Build a Java ME App

As everyone saw in the press already, Facebook bought WhatsApp (maker of the startup mobile messaging app and service) for a hefty $16 billion. There was a lot of armchair analysis done just after the announcement on how WhatsApp could be worth $16 billion. Here's a blog post (not mine) from the TextIt Blog, written by the makers of the TextIt tool for SMS apps, that concludes the answer is Java ME technology (J2ME): Facebook bought WhatsApp for $16 billion because the WhatsApp Java ME port is available for feature phones, which, if you look worldwide, still outnumber smartphones by a lot (a fact many developers and entrepreneurs overlook to their detriment).

Here's a quote:

So it finally happened, Facebook snatched up WhatsApp for the not so bargain price of $16B to the simultaneous head explosion of every entrepreneur in Silicon Valley. A common cry echoed around the world "But, but, how is WhatsApp any different than iMessage / Facebook Messenger / Hangouts?" To that, I have one answer: J2ME

So, even though it might not happen the same way for your mobile startup, you might want to consider the analysis in the TextIt blog post: if you want to think big for mobile, you should consider a Java ME port of your mobile app to make sure you cover the whole market, not just the smaller smartphone (iOS and Android) market. Apparently, Mark Zuckerberg and Facebook did...

SPARC T5-2 Delivers World Record 2-Socket SPECvirt_sc2010 Benchmark

Oracle's SPARC T5-2 server delivered a world record two-chip SPECvirt_sc2010 result of 4270 @ 264 VMs, establishing the performance superiority in virtualized environments of SPARC T5 processors with Oracle Solaris 11, which includes Oracle VM for SPARC and Oracle Solaris Zones as standard virtualization products.

The SPARC T5-2 server has 2.3x better performance than an HP BL620c G7 blade server (with two Westmere EX processors) which used VMware ESX 4.1 U1 virtualization software (best SPECvirt_sc2010 result on two-chip servers using VMware software).

The SPARC T5-2 server has 1.6x better performance than an IBM Flex System x240 server (with two Sandy Bridge processors) which used Kernel-based Virtual Machines (KVM).

This is the first SPECvirt_sc2010 result using Oracle production level software: Oracle Solaris 11.1, Oracle WebLogic Server 10.3.6, Oracle Database 11g Enterprise Edition, Oracle iPlanet Web Server 7 and Oracle Java Development Kit 7 (JDK). The only exception is the Dovecot mail server.

Performance Landscape

Complete benchmark results are at the SPEC website, SPECvirt_sc2010 Results. The following table highlights the leading two-chip results for the benchmark, bigger is better.

SPECvirt_sc2010 Leading Two-Chip Results

| System | Processor | Result @ VMs | Virtualization Software |
|---|---|---|---|
| SPARC T5-2 | 2 x SPARC T5, 3.6 GHz | 4270 @ 264 | Oracle VM Server for SPARC 3.0, Oracle Solaris Zones |
| IBM Flex System x240 | 2 x Intel E5-2690, 2.9 GHz | 2741 @ 168 | Red Hat Enterprise Linux 6.4 KVM |
| HP Proliant BL620c G7 | 2 x Intel E7-2870, 2.4 GHz | 1878 @ 120 | VMware ESX 4.1 U1 |

Configuration Summary

System Under Test Highlights:

1 TB memory

Oracle VM Server for SPARC 3.0

Oracle iPlanet Web Server 7.0.15

Oracle PHP 5.3.14

Dovecot 2.1.17

Oracle WebLogic Server 11g (10.3.6)

Oracle Database 11g (11.2.0.3)

Java HotSpot(TM) 64-Bit Server VM on Solaris, version 1.7.0_51

Benchmark Description

The SPECvirt_sc2010 benchmark is SPEC's first benchmark addressing performance of virtualized systems. It measures the end-to-end performance of all system components that make up a virtualized environment.

The benchmark utilizes several previous SPEC benchmarks that represent tasks commonly run in virtualized environments. The workloads included are derived from SPECweb2005, SPECjAppServer2004 and SPECmail2008. Scaling of the benchmark is achieved by running additional sets of virtual machines until overall throughput reaches a peak. The benchmark includes quality-of-service criteria that must be met for a successful run.

Key Points and Best Practices

The SPARC T5 server running Oracle Solaris 11.1 utilizes embedded virtualization products, Oracle VM for SPARC and Oracle Solaris Zones, which provide a low-overhead, flexible, scalable and manageable virtualization environment.

In order to provide a high level of data integrity and availability, all the benchmark data sets are stored on mirrored (RAID1) storage.

See Also

- SPECvirt_sc2010 Results Page

- SPARC T5-2 Server (oracle.com, OTN)

- Oracle Solaris (oracle.com, OTN)

- Oracle Database (oracle.com, OTN)

- Oracle WebLogic Suite (oracle.com, OTN)

- Oracle Fusion Middleware (oracle.com, OTN)

- Java (oracle.com, OTN)

Disclosure Statement

SPEC and the benchmark name SPECvirt_sc are registered trademarks of the Standard Performance Evaluation Corporation. Results from www.spec.org as of 3/5/2014. SPARC T5-2, SPECvirt_sc2010 4270 @ 264 VMs; IBM Flex System x240, SPECvirt_sc2010 2741 @ 168 VMs; HP Proliant BL620c G7, SPECvirt_sc2010 1878 @ 120 VMs.

Announcing Sun Flash Accelerator F40 PCIe Card EOL

Announcing EOL for Sun Flash Accelerator F40 PCIe Card!

We're announcing End-of-Life (EOL) for the Sun Flash Accelerator F40 PCIe Flash Card on March 4, 2014, with the Sun Flash Accelerator F80 PCIe Flash Card as its replacement. The Sun Flash Accelerator F40 Card has been replaced by the higher capacity Sun Flash Accelerator F80 Card, which offers 2X the capacity at half the $/GB.

ALL SERVERS supporting the F40 (with the exception of the X3-2L) now support the F80 card.

ACTION: Please direct your customers to the new, higher capacity F80 card!

Key dates are as follows:

Please read the Sales Bulletin on Oracle HW TRC for more details on this announcement.

(If you are not registered on Oracle HW TRC, click here ... and follow the instructions.)

Announcing 32 GB DDR3 DIMMs and 400 GB SSDs support for the Netra Server X3-2 system


Oracle announces support for 32 GB DDR3 RDIMMs and 400 GB SSDs on the Netra Server X3-2.

With 32 GB DIMMs, the memory capacity of the Netra Server X3-2 has effectively doubled to 512 GB. The additional memory may be used to improve performance of individual applications by retaining large amounts of data in memory, thereby eliminating the latency associated with disk or network I/O.

Find more information in the Product Bulletin on Oracle HW TRC.

(If you are not registered on Oracle HW TRC, click here ... and follow the instructions.)

Announcing: EOL for SPARC SuperCluster T4-4 Half Rack to Full Rack Upgrade

Read the Product Bulletin on Oracle HW TRC for more details.

(If you are not registered on Oracle HW TRC, click here ... and follow the instructions.)


Mobile Web for the Masses

There’s no arguing that mobile is a big deal. We’ve all heard the stats about crazy mobile adoption over the last 4-5 years, particularly in retail. But one thing still isn’t totally clear: whether mobile Websites or native mobile apps are the way to go for retailers.

comScore cites that consumers spend 55% of their time with retailers on mobile devices, outpacing computers – and this isn’t general online surfing (which is far higher), it’s shopping. Some, including myself, argue that mobile is the lynchpin of a retailer’s omnichannel strategy. Smartphones perfectly link the in-store experience with the digital world – or at least, they should.

Aside from the challenges of supporting a mobile environment, there’s a lot of noise about how to take your mobile program to the next level.

Since things in retail (and particularly mobile) move at lightning pace, our trip down memory lane will only encompass the last 4 years. Oracle (and the big analyst firms) used to tell retail customers to think about how mobile fits into their larger strategy. We used to say things like “study how your valuable customers engage with mobile” or “give customers a mobile-specific, boutique app experience and make your brand more ‘fun,’” or ask, “what part of the customer journey do you think mobile plays a part in?” This line of thinking isn’t relevant anymore.

Yes, there absolutely needs to be mobile-specific functionality and mobile-optimized experiences, but thinking about what “role” mobile plays at specific points in the customer journey is dated. We know that in today’s consumer-driven world, generalizations don’t drive a great customer experience or long-term loyalty. Shoppers want what they want at that moment, and they want retailers to know what personalized merchandising, offers and content are going to meet their needs. I think that we can all agree that thanks to smartphones, attention spans have gone from short to nonexistent. So – in mobile, you’d better deliver.

Now let’s get back to the mobile Web vs. native app conversation. Don’t get me wrong – I think apps can be a vital part of a retailer’s relationship with their most engaged customers. Mobile apps do not make sense for every retailer, as apps must be built out and supported for different platforms, and will only hit a subset of the customer base (and just about zero first-time shoppers). However, native apps present unique opportunities to reward those who already do business with your brand. Shoppers who are going to download (and frequently use) a retailer's app likely fall into the category of valuable loyalist (which, on average, is about 25% of a retailer’s customer base). It’s a fantastic opportunity to drive adoption with offers, flash sales, and perhaps experimental features. But bottom line, it had better be special.

The reality is that, to deliver to the millions of shoppers with a sub-second smartphone attention span, mobile Web is the place to start. And in some cases, mobile Web is all you need.

Why? The questions that analyst firms and we used to ask retailers about the point(s) in the customer journey where mobile is used are now bunk. You can’t predict and you can’t generalize where and how shoppers will access mobile. That approach meant pigeon-holing mobile as part of a larger strategy, whereas today’s goal of omnichannel commerce is that touchpoints need to work together, interchangeably on the shopper’s terms. Mobile actions don’t fit in a box – neither do in-store or Website needs anymore. Predicting doesn’t work. This is why having a great mobile Website with zero access friction is critical.

All digital experiences must be linked – both from backend data and tooling perspectives, and in the customer-facing experience. The mobile Website cannot just be a watered-down version of the core desktop Website. Yes, it must be optimized for the footprint, like giving high visibility to the most popular mobile actions, accessible with the fewest number of finger taps.

When a shopper takes out their phone to interact with a retailer – whether to look at reviews in store, access their shopping cart loaded from their laptop, or begin and complete a transaction from their device, make sure everything is available, familiar, and easy to navigate. Having the same internal tooling running the digital experience across touchpoints will ensure that personalized merchandising and content that consumers want is available wherever they want it.

If your mobile Web site is not optimized, it’s far more hurtful to your brand than lack of a native app. Period.

This is why, for the masses (all of your loyal customers and the millions of anonymous shoppers out there) mobile Web is where it’s at. Sure, your most loyal customers may download an app, and that’s a great opportunity to extend a VIP experience, but what about acquiring new customers? What about shoppers who will never download an app? And what about mobile device fragmentation: how do you select which app(s) to build out and support if you don’t have the resources? Enter mobile Web. If a retailer’s goal is to sell to the masses, mobile Web is the greatest opportunity to do that.

Luxury retailer Molton Brown went with the mobile Web approach, launching their Oracle Commerce powered mobile Websites in North America, Europe, Australia and Japan. The awesome thing is that Molton Brown is extending the same tooling powering their core Website experience to the mobile channel, so experiences are connected, and internal management is simplified. We helped them take a lot of the guesswork out of developing the mobile experience with a reference store – helping them get live quickly with mobile best practices baked into the product. It’s a great example of what Molton Brown calls “another step in our strategy for providing a truly seamless omni-channel experience for our customers.”

Retail has come a long way in the short history of mobile commerce. And with mobile still in its infancy, more change is coming. But if retailers don’t have the budget or internal resources to run a double-pronged app(s) and mobile Web strategy, mobile Web gets retailers the biggest bang for their buck, greater usage, and increased loyalty over time.

Java 8 Launch!

It's finally almost here - Java 8, lambda this and lambda that. :)

Join Oracle and participants from the Java developer and partner communities for a live keynote and more than 35 screencasts. Get involved, ask questions and learn how Java 8 can help you create the future. Don't miss this game-changing event.

March 25 is just around the corner. Sign up here.

Internal Links

Another great question today, this time from friend and colleague, Jerry the master house re-fitter. I think we are competing on who can completely rip and replace their entire house in the shortest time on their own. Every conversation we have starts with 'so what are you working on?' He's in the midst of a kitchen re-fit; I'm finishing off odds and ends before I re-build our stairwell and start work on my hidden man cave under said stairs. Anyhoo, his question!

Can you create a PDF document that shows a summary on the first page and provides links to more detailed sections further down in the document?

Why yes you can Jerry. Something like this? Click on the department names in the first table and the return to top links in the detail sections. Pretty neat huh? Dynamic internal links based on the data, in this case the department names.

It's not that hard to do either. Here's the template, RTF only right now.

The important fields in this case are the ones in red; here's their contents.

TopLink

<fo:block id="doctop" />

Just think of it as an anchor to the top of the page called doctop

Back to Top

<fo:basic-link internal-destination="doctop" text-decoration="underline">Back to Top</fo:basic-link>

Just a live link 'Back to Top' if you will, that takes the user to the doc top location i.e. to the top of the page.

DeptLink

<fo:block id="{DEPARTMENT_NAME}"/>

Just like the TopLink above, this just creates an anchor in the document. The neat thing here is that we dynamically name it the actual value of the DEPARTMENT_NAME. Note that this link is inside the for-each:G_DEPT loop so the {DEPARTMENT_NAME} is evaluated each time the loop iterates. The curly braces force the engine to fetch the DEPARTMENT_NAME value before creating the anchor.

DEPARTMENT_NAME

<fo:basic-link internal-destination="{DEPARTMENT_NAME}"><?DEPARTMENT_NAME?></fo:basic-link>

This is the link for the user to be able to navigate to the detail for that department. It does not use a regular MSWord URL; we have to create a field in the template to hold the department name value and apply the link. Note, no text decoration this time i.e. no underline.

You can add a dynamic link on to anything in the summary section. You just need to remember to keep link 'names' as unique as needed for source and destination. You can combine multiple data values into the link name using the concat function.
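To make the concat idea concrete, here's a rough sketch of what the two fields might look like. It assumes the data also carries a DEPARTMENT_ID element, which is hypothetical and not part of the template above; swap in whatever second value keeps your link names unique. The only thing that really matters is that the anchor and the link build the name with the same expression.

```xml
<!-- Anchor field, placed inside the for-each:G_DEPT loop.
     DEPARTMENT_ID is a hypothetical element, assumed here for illustration. -->
<fo:block id="{concat(DEPARTMENT_NAME, '_', DEPARTMENT_ID)}"/>

<!-- Link field in the summary table. The destination uses the same
     concat expression so it resolves to the anchor created above. -->
<fo:basic-link internal-destination="{concat(DEPARTMENT_NAME, '_', DEPARTMENT_ID)}"><?DEPARTMENT_NAME?></fo:basic-link>
```

As with the plain DEPARTMENT_NAME version, the curly braces get the expression evaluated per row before the anchor or link is created.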

Template and data available here. Tested with 10g and 11g, will work with all BIP flavors.